Introduction

I recently came across a really insightful article written by Mark Levis, in which he brought up several limitations of modern AI. There is one point in particular I want to address:

Lack of Understanding and Common Sense

AI systems, despite their advanced capabilities in specific domains, lack a deep understanding of the world. They operate based on patterns learned from data without fully grasping the underlying concepts. Common-sense reasoning, intuitive understanding, and contextual awareness are areas where AI still falls short.

This point really caught my attention, because I have had many similar “inconvenient” experiences when prompting AI. For example, I once wanted AI to help me generate an animation for my website (a hover animation for my profile image). Even when I broke the steps down clearly, it still misunderstood my intentions. This miscommunication produced outputs far from what I expected, so I had to go back, interact with it repeatedly, and refine my goal.

The Growing Field of AI Prompting

I believe I’m not the only one facing this struggle. Many others also have trouble communicating effectively with AI to get their desired results. This has led to the rise of a new profession, AI prompt engineering, in which experts craft optimal prompts to extract precise outputs from AI systems.

Some developers even share their prompt templates online, such as the Thinking-Claude repo. These templates are incredibly useful, but most of them target technical development. For broader domains like philosophy, daily routines, relationships, or even virtual coaching, these templates may not be the most suitable approach.

Long story short, there simply aren’t enough templates to cover every domain in life.

Moreover, not everyone has a professional CS background to write complex prompts. For people who aren’t familiar with this field, or for older adults, AI can feel inaccessible, leaving them feeling left behind.

A Solution: Guided Prompting

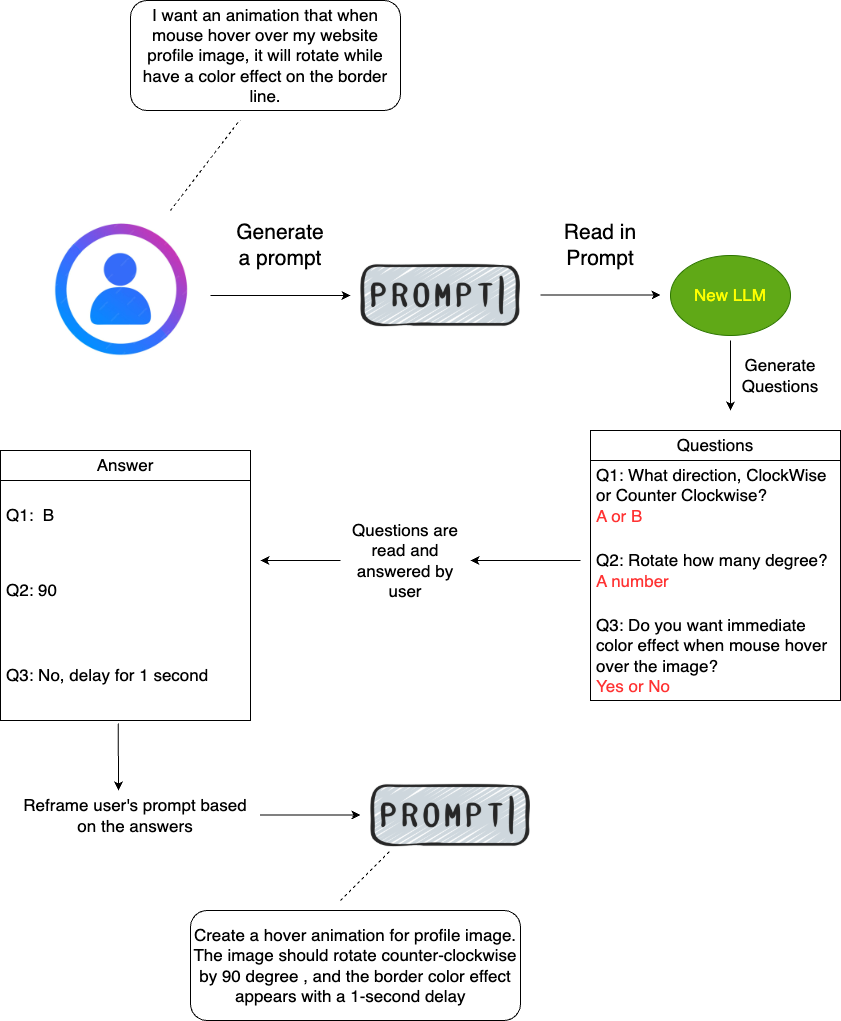

To address this issue, I propose developing a Large Language Model (LLM) that helps users make their prompts more specific. Here’s how it works:

- Users input their original prompt into the model.

- The LLM asks a series of guided questions based on the user’s input.

- These questions help refine the prompt, narrowing it down to ensure the AI delivers accurate and effective results.
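The three steps above can be sketched as a simple question-and-answer loop. This is only a minimal sketch under loud assumptions: the clarifying questions are a hard-coded, hypothetical list standing in for the questions an LLM would actually generate from the user’s input, and `refine_prompt` and `CLARIFYING_QUESTIONS` are illustrative names, not part of any existing tool.

```python
from typing import Callable

# Hypothetical stand-in for the LLM: a real system would generate these
# questions from the user's original prompt instead of using a fixed list.
CLARIFYING_QUESTIONS = [
    ("goal", "What should the final result do or look like?"),
    ("audience", "Who is the result for?"),
    ("format", "What format do you want the answer in?"),
    ("constraints", "Are there any constraints (tools, length, style)?"),
]


def refine_prompt(original: str, answer: Callable[[str], str]) -> str:
    """Ask each clarifying question and fold the non-empty answers into the prompt."""
    details = []
    for key, question in CLARIFYING_QUESTIONS:
        reply = answer(question).strip()
        if reply:  # skip questions the user chose not to answer
            details.append(f"- {key}: {reply}")
    if not details:
        return original  # nothing to add, keep the prompt unchanged
    return original + "\n\nDetails:\n" + "\n".join(details)
```

For an interactive session, `answer` could simply be Python’s built-in `input`, so `refine_prompt("Make a hover animation for my profile image", input)` would ask each question in the terminal. Replacing the fixed list with model-generated questions is where the actual LLM would come in.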

For clarity, I put together a breakdown diagram below.

Logic Diagram

Here’s a conceptual diagram of how the system would function:

Credit / Inspiration: Akinator

The idea for this project comes from a mind-reading game called Akinator. In this game, players think of a character, and the app asks a series of questions to guess who it is. Similarly, the proposed LLM would ask targeted questions to clarify the user’s request.

However, unlike Akinator, where players may be uncertain about a character’s background, users of this LLM typically have a clear idea of the outcome they want. This clarity allows the LLM to focus its questions and guide users toward creating a highly specific prompt. The user simply answers “yes” or “no,” and the LLM refines the input step by step.
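The yes/no refinement can be sketched the way a twenty-questions game is often played: keep a set of candidate interpretations and, at each step, ask about the trait that splits the remaining candidates most evenly. This is an assumption-laden sketch, not how Akinator actually works (its algorithm is not public): the candidate refinements in `CANDIDATES` and their traits are hypothetical, and each question is represented only by its trait name rather than a full sentence.

```python
from typing import Callable, Optional

# Hypothetical candidate refinements of a vague "animate my profile image" prompt.
# Each candidate is described by the set of yes/no traits it has.
CANDIDATES: dict[str, set[str]] = {
    "scale up on hover": {"hover", "size"},
    "rotate on hover": {"hover", "motion"},
    "fade in on page load": {"load", "opacity"},
    "slide in on page load": {"load", "motion"},
}


def best_question(candidates: dict[str, set[str]]) -> Optional[str]:
    """Return the trait that splits the candidates most evenly, or None if none splits them."""
    traits = set().union(*candidates.values())
    best, best_gap = None, len(candidates)
    for trait in sorted(traits):  # sorted so ties are broken deterministically
        yes = sum(trait in t for t in candidates.values())
        gap = abs(len(candidates) - 2 * yes)  # 0 means a perfect 50/50 split
        if 0 < yes < len(candidates) and gap < best_gap:
            best, best_gap = trait, gap
    return best


def narrow_down(candidates: dict[str, set[str]], answer: Callable[[str], bool]) -> list[str]:
    """Ask yes/no questions until one candidate is left (or no question can split them)."""
    remaining = dict(candidates)
    while len(remaining) > 1:
        trait = best_question(remaining)
        if trait is None:  # the remaining candidates are indistinguishable
            break
        keep = answer(trait)  # True means "yes, the result should have this trait"
        remaining = {name: t for name, t in remaining.items() if (trait in t) == keep}
    return sorted(remaining)
```

With these four candidates and even splits, two yes/no answers are enough to single out one refinement. In a real system, the candidates and traits would have to come from the LLM rather than a fixed table.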

Conclusion

This is just my small take on current AI, and there are definitely other approaches. We also have to take into account that some AI models are allegedly degraded on purpose to avoid violating laws. For now, I’ll try my best to implement this LLM, and I hope one day it helps society!